Social robots are increasingly becoming part of our society. Their interaction capabilities depend largely on their ability to detect and respond to stimuli in their environment as humans would. This paper describes a bio-inspired, exogenous attention-based perception architecture for social robots. Exogenous attention regulates the involuntary attentional processes driven by the salience of perceived stimuli. The architecture incorporates bio-inspired concepts, such as inhibition of return, focus-of-attention selection, and salient stimulus detection, that have been validated on a real social robot. We have integrated several multimodal mechanisms for recognising salient visual, auditory, and tactile stimuli. The attention architecture’s performance was then evaluated by comparing it to a ground truth gathered from interactions with 50 users, obtaining comparable responses in real time.

https://www.sciencedirect.com/science/article/pii/S0957417424004883

Experiment settings and data collection process

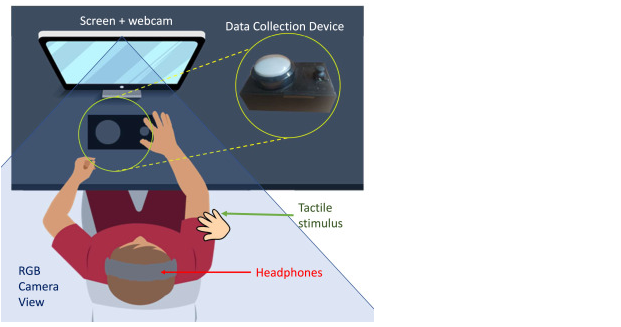

The goal of the data collection process was to log the participants’ responses to three types of stimuli: (i) videos with different stimuli displayed on a screen; (ii) binaural stimuli (Møller, 1992) (360° sounds) emitted through headphones; and (iii) tactile stimuli caused by the experimenter touching the participants on the arms. Section 5.1.3 provides more details about the dataset employed and the stimuli collected.

To log the participants’ responses to the auditory and tactile salient stimuli in real time, we used a data collection device (DCD) consisting of a box with an Arduino microcontroller that reads information from a joystick and a button. Participants used the joystick to indicate the direction of the sound, and pressed the button whenever they received a salient tactile stimulus. For the visual stimuli, we used the GazeRecorder web app to detect the areas of the screen each participant paid attention to when watching the videos. This application uses a webcam to collect data on the visual fixations on the screen during the playback of the different videos.
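As an illustration of how readings from a device like the DCD could be turned into response events, the following is a minimal sketch, not the authors' implementation. It assumes a hypothetical serial log format of `timestamp_ms,x,y,button`, raw joystick axes in the 0–1023 range centred at 512, and a dead zone of 100 units to ignore a resting joystick; none of these details are given in the source, and the function names are illustrative.

```python
import math


def joystick_to_direction(x, y, center=512, dead_zone=100):
    """Convert raw joystick axes to an angle in degrees in [0, 360).

    Returns None when the stick is inside the dead zone (treated as at rest).
    The centre value and dead zone are assumptions, not values from the paper.
    """
    dx = x - center
    dy = y - center
    if math.hypot(dx, dy) < dead_zone:
        return None
    return math.degrees(math.atan2(dy, dx)) % 360.0


def parse_dcd_line(line):
    """Parse one line of the hypothetical 'timestamp_ms,x,y,button' log format."""
    t_ms, x, y, button = line.strip().split(",")
    return {
        "t_ms": int(t_ms),
        "direction_deg": joystick_to_direction(int(x), int(y)),
        "touch": button == "1",  # button pressed = tactile stimulus reported
    }


if __name__ == "__main__":
    for raw in ["1500,1023,512,0", "2300,512,0,1", "3100,520,500,0"]:
        print(parse_dcd_line(raw))
```

Under these assumptions, each parsed record carries a timestamp, an optional sound-direction estimate, and a flag for a reported touch, which is the kind of information needed to compare participant responses against stimulus onsets.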

During the data collection process, participants were first greeted by the experimenter and then introduced to the experimental setup (shown in Fig. 10). Participants were asked to keep their hands on the DCD during the entire test to minimise their response time. The experimenter described the goal of the test, the stimuli the participants would be presented with, and what they should do after perceiving each stimulus (e.g., “You have to press the button whenever you feel that someone has touched you”, “You will point with the joystick towards the direction the sound you hear is coming from”). Finally, the GazeRecorder application was calibrated to acquire the visual data accurately.