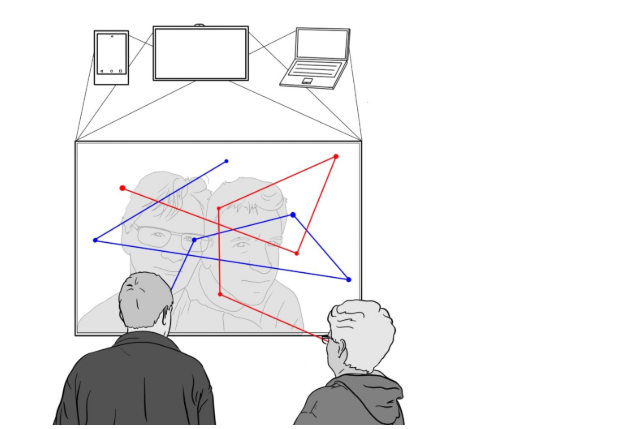

Existing gaze-based methods for user identification require either special-purpose visual stimuli or artificial gaze behavior. Here, we explore how users can be differentiated by analyzing their natural gaze behavior while they freely look at images. Our approach is based on the observation that looking at personally meaningful images, for example, a picture from your last holiday, induces stronger emotional responses that are reflected in gaze behavior and are, hence, unique to the person who experienced that situation. We collected gaze data in a remote study (N = 39) in which participants looked at three image categories: personal images, other people’s images, and random images from the Internet. Using machine learning, we demonstrate that participants can be identified with an accuracy of 85%. The results pave the way for a new class of authentication methods based solely on natural human gaze behavior.
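The abstract frames identification as a machine-learning classification over gaze recordings. The sketch below illustrates one way such a pipeline could be structured; it is not the authors' method, and its features and classifier are assumptions. It treats each gaze sample as a hypothetical `(t, x, y)` tuple (seconds, normalized screen coordinates), detects fixations with the standard dispersion-threshold (I-DT) algorithm using illustrative thresholds, summarizes each recording with three hypothetical features (fixation count, mean fixation duration, mean saccade amplitude), and substitutes a simple nearest-centroid classifier for whatever model the study actually used.

```python
from math import dist
from statistics import mean

# Hypothetical sample format: (timestamp in s, x, y) in normalized screen coordinates.
Sample = tuple[float, float, float]


def _dispersion(window: list[Sample]) -> float:
    """I-DT dispersion: horizontal extent plus vertical extent of the window."""
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples: list[Sample], max_dispersion: float = 0.05,
                     min_duration: float = 0.1) -> list[tuple[float, float, float, float]]:
    """Dispersion-threshold (I-DT) fixation detection.

    Returns (start_t, end_t, centroid_x, centroid_y) per fixation.
    Threshold defaults are illustrative assumptions, not values from the study.
    """
    fixations = []
    i, n = 0, len(samples)
    while i < n:
        # Grow an initial window until it spans at least min_duration.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration:
            j += 1
        if j >= n:
            break  # Not enough samples left for another fixation.
        if _dispersion(samples[i:j + 1]) <= max_dispersion:
            # Extend the window while the points stay within the dispersion limit.
            while j + 1 < n and _dispersion(samples[i:j + 2]) <= max_dispersion:
                j += 1
            window = samples[i:j + 1]
            cx = mean(s[1] for s in window)
            cy = mean(s[2] for s in window)
            fixations.append((window[0][0], window[-1][0], cx, cy))
            i = j + 1
        else:
            i += 1  # Drop the first sample and try again.
    return fixations


def gaze_features(samples: list[Sample]) -> tuple[float, float, float]:
    """Hypothetical per-recording features: fixation count, mean duration, mean saccade amplitude."""
    fix = detect_fixations(samples)
    if not fix:
        return (0.0, 0.0, 0.0)
    durations = [end - start for start, end, _, _ in fix]
    amplitudes = [dist(a[2:], b[2:]) for a, b in zip(fix, fix[1:])]
    return (float(len(fix)), mean(durations), mean(amplitudes) if amplitudes else 0.0)


def train_centroids(labelled: dict[str, list[tuple[float, ...]]]) -> dict[str, tuple[float, ...]]:
    """Nearest-centroid training: average the feature vectors recorded for each user."""
    return {user: tuple(mean(col) for col in zip(*vecs)) for user, vecs in labelled.items()}


def identify(centroids: dict[str, tuple[float, ...]], features: tuple[float, ...]) -> str:
    """Return the user whose centroid is closest (Euclidean) to the given features."""
    return min(centroids, key=lambda user: dist(centroids[user], features))
```

Because fixation counts, durations, and amplitudes live on very different scales, a realistic version would standardize features before computing distances and would estimate accuracy on held-out recordings per participant; the sketch omits both for brevity.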

http://mkhamis.com/data/papers/abdrabou2024usec.pdf

We conducted the study remotely. To obtain valid gaze data, we used the GazeRecorder API at a frame rate of 33 Hz; this API is designed specifically for webcam-based eye tracking within web browsers. We implemented a website in HTML, CSS, and JavaScript, hosted on our university server, where we integrated the eye tracker