Research subject: methods for controlling virtual objects by monitoring gaze direction. Objective: to investigate methods for controlling complex objects, including the main methods, models, and algorithms for tracking gaze direction and the feasibility of using a webcam to control virtual objects. Research methods: the study uses a systems approach to analyze the system, machine vision and augmented reality methods, and mathematical modeling to determine the optimal method for processing input data samples.

https://openarchive.nure.ua/server/api/core/bitstreams/69fe61be-e240-4003-9fe1-f4268721e739/content

Ukraine’s economic development had already slowed noticeably in the years before Russia’s full-scale invasion, but the heaviest losses came after February 24, 2022. The forced relocation of research and production centers to western Ukraine and abroad significantly affected the software development industry. At the same time, it accelerated the development and adoption of technological solutions for remote interaction between humans and hardware-software systems, which are applied in various fields. One such direction is interaction with virtual objects.

Virtual objects are usually controlled with standard input devices: a keyboard, mouse, joystick, steering wheel, or their analogs. Systems based on human motion recognition are considered highly advanced, but another channel that can be used effectively to control such objects is the direction of a person’s gaze.

A person perceives most information about the surrounding world through vision. The elements of the visual system (the eye, the optic nerve, and the visual analyzer of the brain) are closely related, so studying eye-movement trajectories allows conclusions to be drawn about how individual images are recognized and about mental processes as a whole. Tracking gaze direction also makes it possible to build fundamentally new interfaces between humans and technical devices. For this reason, developing systems that use gaze direction to control complex objects is a relevant scientific and technical problem.

Eye-tracking technologies are used in various computer interface design systems. Fixation time and the density of the gaze trajectory indicate which elements of the viewed object receive the most attention.
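As an illustration of how fixation time can be turned into an attention measure, the following minimal sketch sums the time the gaze spends inside rectangular areas of interest. The sample format (`x`, `y`, `t` in milliseconds), the area format, and the function name `dwellTimeByArea` are assumptions made for this example and do not come from any particular eye-tracking library.

```javascript
// Hypothetical formats, assumed for this sketch only:
//   sample: { x, y, t }  with x/y in pixels and t in milliseconds
//   area:   { id, x, y, width, height }  (an interface element's bounding box)
function dwellTimeByArea(samples, areas) {
  const totals = Object.fromEntries(areas.map((a) => [a.id, 0]));
  // Each sample is credited with the time until the next sample,
  // so the last sample contributes nothing.
  for (let i = 0; i + 1 < samples.length; i++) {
    const s = samples[i];
    const dt = samples[i + 1].t - s.t;
    const hit = areas.find(
      (a) =>
        s.x >= a.x && s.x < a.x + a.width &&
        s.y >= a.y && s.y < a.y + a.height
    );
    if (hit) totals[hit.id] += dt;
  }
  return totals;
}

const areas = [
  { id: "menu", x: 0, y: 0, width: 200, height: 50 },
  { id: "content", x: 0, y: 50, width: 200, height: 150 },
];
const samples = [
  { x: 20, y: 10, t: 0 },
  { x: 30, y: 20, t: 100 },
  { x: 40, y: 120, t: 250 },
  { x: 500, y: 500, t: 400 }, // outside every area
];
console.log(dwellTimeByArea(samples, areas)); // { menu: 250, content: 150 }
```

Elements with larger totals received more visual attention during the recording.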

Current applications of eye-tracking systems in software engineering can be divided into two main groups. The first consists of systems that passively collect and analyze eye-movement data during an experiment. This group includes the main commercial use of eye tracking at present: evaluating the visual convenience of application interfaces and web pages. It lets developers take into account objective information about which interface elements the user looks at most often while working with the program. In medicine, eye-tracking systems are used to diagnose diseases of the nervous system, since with some of them the eye movements of a sick person differ significantly from those of a healthy one. Eye-tracking systems can also be used for personal identification, because the pattern of eye movements is as individual as handwriting but almost impossible to imitate.

Modern operating systems (for example, Windows 11) already support certain types of eye-tracking systems that require additional hardware, such as the Tobii Eye Tracker. At the same time, systems that implement eye tracking with commonly available devices, namely smartphones and webcams, also deserve consideration.

The aim of the study is to analyze methods of using eye-tracking systems to control virtual objects, which may include the menu system of a software application.

Given the problems outlined above, the subject area of the study is methods of controlling complex objects, which makes it possible to focus on the feasibility of applying the results in various technical systems.

GazeRecorder (https://gazerecorder.com/gazerecorder/) is a free program that uses a webcam to track the user’s eye movements and apply them to controlling virtual objects. It lets users interact with the computer through eye movements, performing actions such as selecting, clicking, and dragging. Its main drawback is the client-server architecture: recognition is performed by a cloud service, which imposes certain restrictions on use.

Windows Eye Control is a tool built into the Windows operating system for contactless control of virtual objects by means of eye tracking, which relies on external controllers. It allows users to perform various actions using eye movements.

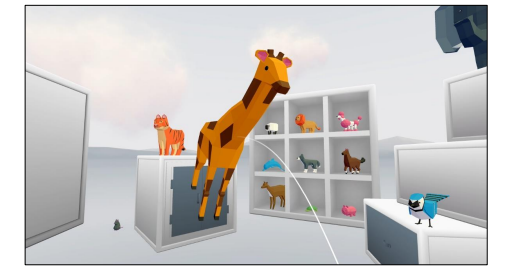

These and other existing methods of contactless control of virtual objects based on eye tracking each have their own features. They can be applied in fields such as virtual reality, virtual medicine, and virtual navigation, and they let users interact with virtual objects through eye movements, facial expression recognition, and similar inputs.

Given the specifics of the proposed study, a controlled experiment was chosen as the research method. The test environment has the following characteristics:

- CPU: Intel Core i5-1135G7;

- RAM: 16 GB;

- VRAM: 4 GB;

- Monitor: SyncMaster 225bw (22″), resolution 1680×1050;

- Webcam: Logitech C615 HD;

- OS: Windows 10.

For the experiments, the JavaScript libraries GazeRecorder and WebGazer were chosen. Each provides its own API for determining the direction of the user’s gaze, which can be applied to controlling virtual objects in a web-based environment.

GazeRecorder API is a JavaScript interface for tracking user interaction with web applications by means of gaze tracking and eye gesture recognition. It provides advanced gaze tracking capabilities, gaze data analysis, eye gesture tracking, and other functions for implementing various user interaction scenarios with conventional webcams.

WebGazer.js is a JavaScript library for using eye-tracking technology in web applications. It detects and tracks the user’s eye movements using a webcam and makes this information available for various purposes, such as interacting with the application interface or collecting statistics about the user’s gaze. It provides advanced gaze tracking capabilities, gaze data analysis, eye gesture tracking, and other functions that can be used to implement various user interaction scenarios.
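To make the gaze-position part of this concrete, the sketch below wires a gaze listener to a cursor-moving callback and smooths the predictions with a simple exponential moving average. It relies on the `setGazeListener` and `begin` calls from the WebGazer.js documentation; the wrapper `startGazeCursor`, the `moveCursor` callback, and the smoothing factor `alpha` are hypothetical names introduced for this example, and the smoothing step is an assumption rather than something the library or this study prescribes.

```javascript
// Hypothetical helper: forwards smoothed gaze predictions to moveCursor(x, y).
// `webgazer` is passed in explicitly so the function does not depend on a global.
function startGazeCursor(webgazer, moveCursor, alpha = 0.3) {
  let smoothed = null;
  webgazer.setGazeListener((data, elapsedTime) => {
    if (data == null) return; // no prediction available for this frame
    // Exponential moving average to damp frame-to-frame jitter (assumed step).
    smoothed =
      smoothed === null
        ? { x: data.x, y: data.y }
        : {
            x: alpha * data.x + (1 - alpha) * smoothed.x,
            y: alpha * data.y + (1 - alpha) * smoothed.y,
          };
    moveCursor(smoothed.x, smoothed.y);
  });
  return webgazer.begin(); // starts webcam capture and prediction
}

// Possible browser usage (assumes webgazer.js is loaded and an element
// with id "gaze-dot" exists on the page):
//
//   const dot = document.getElementById("gaze-dot");
//   startGazeCursor(window.webgazer, (x, y) => {
//     dot.style.left = x + "px";
//     dot.style.top = y + "px";
//   });
```

A smaller `alpha` gives a steadier but slower cursor; a larger one follows the gaze more closely at the cost of jitter.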

In general, both libraries provide:

- Gaze tracking: they detect and track the user’s eye movements using a webcam and collect data about the gaze position (coordinates on the screen), which can then be analyzed and used for interaction with the application;

- Eye gesture recognition: the libraries’ APIs make it possible to track various eye gestures, such as blinking, gaze diversion, and slow eye closure, which can be used to implement touchless interaction.
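One simple way to turn a stream of gaze coordinates into a touchless "click" is dwell-time selection: an item is activated once the gaze has stayed on it for a set time. This is a generic technique used here for illustration, not a method taken from either library; the names `createDwellSelector`, `targets`, `dwellMs`, and `onSelect` are hypothetical.

```javascript
// Hypothetical target format: { id, x, y, width, height } (e.g. menu items).
// Returns an update(x, y, t) function to be fed with gaze samples (t in ms).
function createDwellSelector(targets, dwellMs, onSelect) {
  let currentId = null; // target currently under the gaze, or null
  let enteredAt = 0;    // time the gaze entered the current target
  let fired = false;    // whether the current target was already selected

  return function update(x, y, t) {
    const hit = targets.find(
      (r) => x >= r.x && x < r.x + r.width && y >= r.y && y < r.y + r.height
    );
    const id = hit ? hit.id : null;
    if (id !== currentId) {
      // Gaze moved to another target (or off all targets): restart the timer.
      currentId = id;
      enteredAt = t;
      fired = false;
      return;
    }
    if (id !== null && !fired && t - enteredAt >= dwellMs) {
      fired = true; // select once per continuous dwell
      onSelect(id);
    }
  };
}

const menu = [
  { id: "open", x: 0, y: 0, width: 100, height: 40 },
  { id: "save", x: 0, y: 40, width: 100, height: 40 },
];
const update = createDwellSelector(menu, 500, (id) => console.log("selected", id));
update(10, 10, 0);   // gaze enters "open"
update(12, 11, 300); // still dwelling, below the threshold
update(11, 12, 520); // logs: selected open
```

The dwell threshold trades speed against accidental activations, so it would normally be tuned per user and per task.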

The GazeRecorder API is based on a server-oriented architecture: the video stream from the webcam is sent to a remote server, where the gaze data is processed and stored.

GazeRecorder uses a webcam to track and record which part of the screen the user is looking at, and then creates a heat map from this data. GazeRecorder is quite accurate, but it requires careful calibration.
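The idea behind a gaze heat map can be sketched independently of any library: the screen is divided into cells and each recorded gaze point increments the counter of the cell it falls into. The function below is a minimal illustration under that assumption; `buildHeatmap` and `cellSize` are hypothetical names, and this is not GazeRecorder’s actual algorithm, which the source does not describe.

```javascript
// Counts gaze points per grid cell. Points outside the screen are ignored.
// Returns a rows x cols array of counts (grid[row][col]).
function buildHeatmap(points, screenWidth, screenHeight, cellSize) {
  const cols = Math.ceil(screenWidth / cellSize);
  const rows = Math.ceil(screenHeight / cellSize);
  const grid = Array.from({ length: rows }, () => new Array(cols).fill(0));
  for (const { x, y } of points) {
    if (x < 0 || y < 0 || x >= screenWidth || y >= screenHeight) continue;
    grid[Math.floor(y / cellSize)][Math.floor(x / cellSize)] += 1;
  }
  return grid;
}

// Example with the 1680×1050 monitor resolution used in the experiments
// and an arbitrarily chosen 210-pixel cell (8 columns by 5 rows).
const gazePoints = [
  { x: 100, y: 100 },
  { x: 150, y: 50 },
  { x: 1679, y: 1049 },
  { x: -5, y: 10 }, // off-screen, ignored
];
const heat = buildHeatmap(gazePoints, 1680, 1050, 210);
console.log(heat[0][0], heat[4][7]); // 2 1
```

Rendering the counts as colors, optionally after blurring, gives the familiar heat-map picture; poor calibration shifts points into the wrong cells, which is why calibration quality matters.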