Eye-gaze-based interaction is a promising modality for faster and seamless hands-free (also known as contactless or touchless) interaction [108]. It enables people with limited motor skills to interact with computer systems without using the hands. It is also beneficial in Situationally-Induced Impairments and Disabilities , when the hands are incapacitated due to reasons such as performing a secondary task, minor injuries, or unavailability of a keyboard. Hands-free interaction is also of a particular interest in situations when touching public devices is to be avoided to prevent the spread of an infectious disease. Eye tracking technologies measure a person’s eye movements and positions to understand where

the person is looking at any given time. In the past, eye tracking required expensive, often nonportable extramural devices, which were slow and error prone . Recent developments have made eye tracking more affordable, portable, and reliable. Modern algorithms can track eyes using webcams almost as fast and accurately as commercial tracking technologies . The most common application of eye tracking is to direct control a mouse cursor using eye movements. While the idea of performing tasks simply by looking at the interface is empowering, eye tracking has yet to become a pervasive technology due to the “Midas Touch” problem, which refers to the classic eye tracking problem where the system cannot distinguish between users simply scanning the items versus their intention to select them, resulting in unwanted selections wherever the user looks, making the system unusable. One solution to this problem is to use a different action to activate selection. The most commonly used selection method with eye tracking is dwell, where

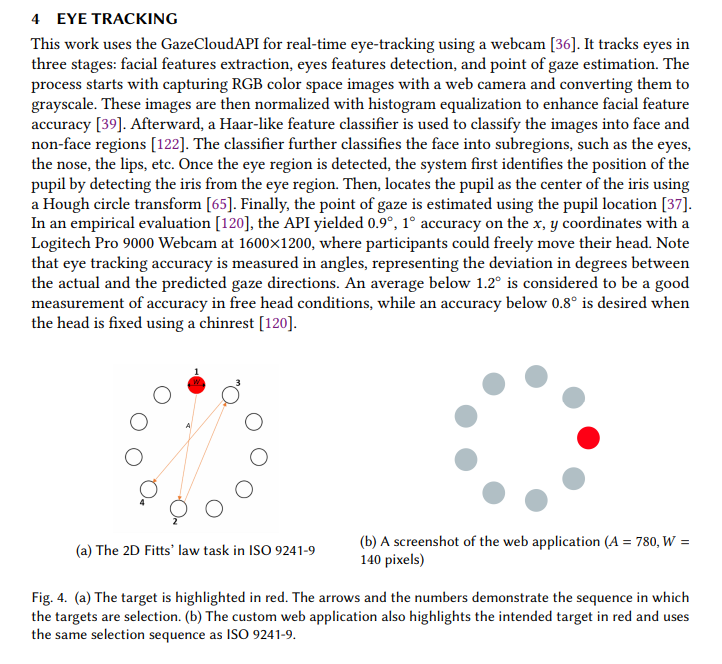

users look at a target for 100–3,000 ms to select it. It is, however, difficult to pick the most effective dwell time for a population since a short dwell time makes the system faster but increases false positives, while a long dwell time makes the system slower and causes users physical and cognitive stress . Many alternatives have been proposed to substitute dwell, including head and gaze gestures, blinking, voluntary facial muscle activation, brain signals, and foot pedals. Most of these approaches either use external, invasive hardware that are not yet scalable in practical situations or exploit unnatural behaviors that can cause users irritation and fatigue. Speech is promising but not reliable in noisy places (e.g., when listening to music). Users are also hesitant to use speech when in public places. Besides, speech does not work well with people with severe speech disorders since it relies on the sound produced by the users. In this work, we investigate silent speech as an alternative selection method for eye-gaze pointing. Silent speech is an image-based language processing method that interprets users’ lip movements into text. We envision several benefits of using silent speech commands as a selection method. First, it does not require the use of external hardware since both eye tracking and silent speech recognition can occur through the same webcam. Second, silent speech does not rely on acoustic features, thus can be used in noisy places or in places where people do not want to be disturbed. Although outside the scope of this work, silent speech can also accommodate people with speech disorders. The contribution of our work is three-fold. First, we propose a stripped-down image-based model that can recognize a small number of silent commands almost as fast as stateof-the-art speech recognition models. 
Second, we design a silent speech-based selection method and compare it with other hands-free selection methods, namely dwell and speech, in a Fitts’ law experiment. We follow up on this with another study investigating the most effective screen areas for eye-gaze pointing in terms of throughput, pointing time, and error rate. Finally, we design a silent speech-based menu selection method for eye-gaze pointing and evaluate it in an empirical study.
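The dwell mechanism described earlier, and its speed versus false-positive trade-off, can be illustrated with a minimal Python sketch. It assumes gaze samples have already been mapped to a target id (or `None` for empty space). The class `DwellSelector`, the helper `run`, and the sample timings are hypothetical illustrations, not the authors' implementation. The timer restarts whenever gaze moves to a different target, and a selection fires at most once per visit.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Optional, Tuple


@dataclass
class DwellSelector:
    """Hypothetical dwell-time selector: fires when gaze stays on one target for dwell_ms."""

    dwell_ms: float  # threshold; the text cites 100-3,000 ms as the usual range
    _target: Optional[str] = field(default=None, init=False)  # target currently under gaze
    _start_ms: float = field(default=0.0, init=False)         # when gaze arrived on _target
    _fired: bool = field(default=False, init=False)           # already selected during this visit?

    def update(self, t_ms: float, target: Optional[str]) -> Optional[str]:
        """Feed one gaze sample; return the target id if this sample completes a dwell."""
        if target != self._target:
            # Gaze moved to another target (or to empty space): restart the timer.
            self._target, self._start_ms, self._fired = target, t_ms, False
            return None
        if target is not None and not self._fired and t_ms - self._start_ms >= self.dwell_ms:
            # Fire once per visit so a lingering gaze does not select the target repeatedly.
            self._fired = True
            return target
        return None


def run(selector: DwellSelector,
        samples: Iterable[Tuple[float, Optional[str]]]) -> List[Tuple[float, str]]:
    """Replay (timestamp_ms, target) samples and collect the selections that fire."""
    selections = []
    for t_ms, target in samples:
        hit = selector.update(t_ms, target)
        if hit is not None:
            selections.append((t_ms, hit))
    return selections


if __name__ == "__main__":
    # Gaze sampled every 50 ms: a brief glance at A (0-200 ms), then a steady look at B.
    samples = [(t, "A") for t in range(0, 250, 50)] + [(t, "B") for t in range(250, 900, 50)]
    print(run(DwellSelector(dwell_ms=500), samples))  # [(750, 'B')]
    print(run(DwellSelector(dwell_ms=150), samples))  # [(150, 'A'), (400, 'B')]
```

With a 500 ms threshold the brief glance at A is ignored and only B is selected; with a 150 ms threshold the glance at A also triggers a selection, which is exactly the Midas Touch false positive that short dwell times invite.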
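Throughput in Fitts’ law studies is often computed with the ISO 9241-9 style effective formulation: effective width $W_e = 4.133 \times SD_x$, effective index of difficulty $ID_e = \log_2(D_e / W_e + 1)$, and throughput $TP = ID_e / MT$. This excerpt does not state which variant the authors used, so the Python sketch below is an assumption; the function name `effective_throughput` and its arguments are hypothetical.

```python
import math
from statistics import mean, stdev
from typing import Sequence

# Multiplier that turns the endpoint standard deviation into an effective
# target width covering about 96% of selections (±2.066 SD).
WIDTH_FACTOR = 4.133


def effective_throughput(distances: Sequence[float],
                         endpoint_dx: Sequence[float],
                         times_s: Sequence[float]) -> float:
    """Effective throughput in bits/s for one distance-width condition.

    distances   -- actual movement distance of each trial (e.g., px)
    endpoint_dx -- signed selection-endpoint deviation along the task axis (same unit)
    times_s     -- pointing time of each trial in seconds
    """
    if not (len(distances) == len(endpoint_dx) == len(times_s)) or len(distances) < 2:
        raise ValueError("need at least two trials with matching lengths")
    we = WIDTH_FACTOR * stdev(endpoint_dx)  # effective width
    if we == 0:
        raise ValueError("endpoint spread is zero; effective width is undefined")
    de = mean(distances)                    # effective distance
    ide = math.log2(de / we + 1)            # effective index of difficulty (bits)
    return ide / mean(times_s)              # bits per second
```

Because $W_e$ is derived from where selections actually landed, this formulation folds accuracy into the speed measure, which is why throughput is reported alongside pointing time and error rate.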

https://www.theiilab.com/pub/Pandey_ISS2022_Silent_Eye_Gaze_Pointing.pdf